MemU

MemU is an agentic memory layer for LLM applications, designed specifically for AI companions.

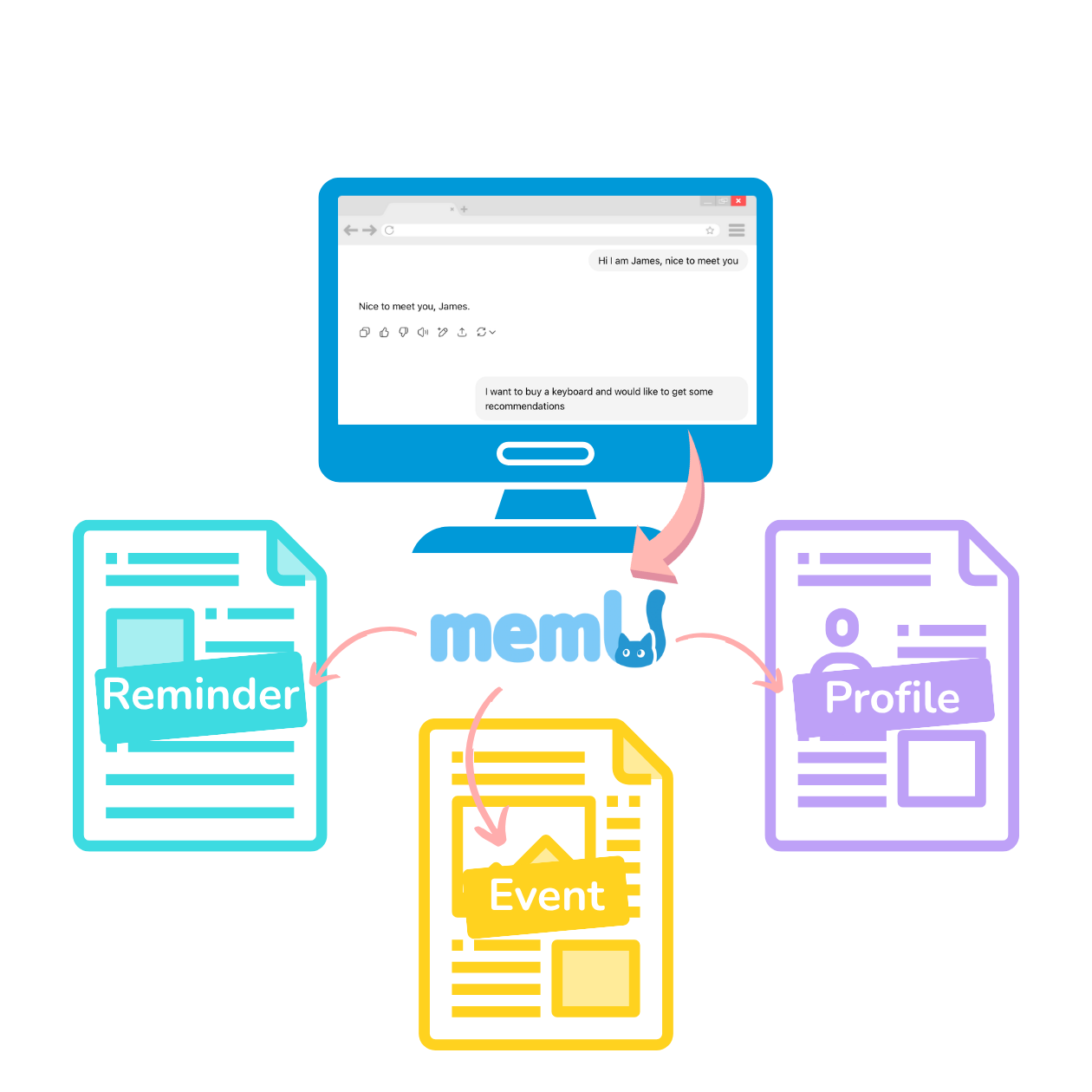

MemU (memu.pro) is an open-source, agentic memory framework designed to give AI agents long-term, structured, and evolving memory. Rather than storing raw vectors, MemU organizes memories like an intelligent file system: it autonomously records, links, summarizes, and selectively forgets entries so AI companions and agents can "remember" users accurately across sessions.
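To make the file-system analogy concrete, here is a minimal toy sketch of a store that records, links, summarizes, and forgets entries. It is an illustration only, not MemU's implementation or API: the `ToyMemoryStore` class, its method names, the path-style keys, and the age-based forgetting rule are all assumptions made for this example. In particular, the "summary" here is plain string concatenation rather than the LLM-driven summarization an agentic system would likely use.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class MemoryItem:
    """One remembered fact, addressed by a file-like path such as 'profile/name'."""
    text: str
    last_used: int = 0                             # logical clock value at last write
    links: Set[str] = field(default_factory=set)   # paths of related items


class ToyMemoryStore:
    """Hypothetical illustration; names and behavior are not taken from MemU."""

    def __init__(self) -> None:
        self.items: Dict[str, MemoryItem] = {}
        self.clock = 0  # increments on every record() call

    def record(self, path: str, text: str) -> None:
        """Store (or overwrite) a memory under a folder-like path."""
        self.clock += 1
        self.items[path] = MemoryItem(text=text, last_used=self.clock)

    def link(self, a: str, b: str) -> None:
        """Connect two existing memories in both directions."""
        self.items[a].links.add(b)
        self.items[b].links.add(a)

    def summarize(self, folder: str) -> str:
        """Naive summary: join every entry stored under the given folder."""
        prefix = folder.rstrip("/") + "/"
        return "; ".join(
            item.text
            for path, item in sorted(self.items.items())
            if path.startswith(prefix)
        )

    def forget(self, max_age: int) -> List[str]:
        """Drop entries not written within the last `max_age` clock ticks."""
        stale = [p for p, it in self.items.items()
                 if self.clock - it.last_used > max_age]
        for path in stale:
            del self.items[path]
        for item in self.items.values():
            item.links.difference_update(stale)  # remove dangling links
        return stale


store = ToyMemoryStore()
store.record("profile/name", "User is called Sam")
store.record("events/trip", "Sam visited Lisbon in May")
store.record("profile/pet", "Sam has a cat named Miso")
store.link("profile/name", "events/trip")

summary = store.summarize("profile")
forgotten = store.forget(max_age=1)  # the oldest entry ages out
```

Keying entries by path keeps related memories grouped like files in a folder, which is what makes per-folder summaries and selective forgetting straightforward in this sketch.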

MemU provides a persistent, organized memory layer for LLM-powered applications.

MemU can be used via a hosted cloud service (fastest to start), a self-hosted community edition (full control), or an enterprise edition (SLA, integrations). The quick start from the project's README installs the Python client and calls the memory API:

```shell
# Install the Python client
pip install memu-py
```

```python
# Example usage (Python)
import os

from memu import MemuClient

# The API key is read from the MEMU_API_KEY environment variable
memu_client = MemuClient(
    base_url="https://api.memu.so",
    api_key=os.getenv("MEMU_API_KEY")
)

memu_client.memorize_conversation(
    conversation="(long conversation text, recommend ~8000 tokens)",
    user_id="user001",
    user_name="User",
    agent_id="assistant001",
    agent_name="Assistant"
)
```
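The quick start hints that conversation text of roughly 8000 tokens per call works well. One way to get there is to buffer individual turns and flush them in batches near that size. The sketch below is a standalone helper, not part of the MemU client: `batch_turns`, `estimate_tokens`, and the 4-characters-per-token estimate are assumptions made for illustration, and a real tokenizer would give more accurate counts.

```python
from typing import Iterable, Iterator, List

TOKEN_BUDGET = 8000    # target taken from the quick start's "~8000 tokens" hint
CHARS_PER_TOKEN = 4    # crude assumption; replace with a real tokenizer if available


def estimate_tokens(text: str) -> int:
    """Rough token estimate based on character count."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def batch_turns(turns: Iterable[str], budget: int = TOKEN_BUDGET) -> Iterator[str]:
    """Group consecutive turns into newline-joined batches of at most ~`budget` tokens.

    A single turn larger than the budget is emitted as its own batch.
    """
    batch: List[str] = []
    used = 0
    for turn in turns:
        cost = estimate_tokens(turn)
        # Flush the current batch before it would exceed the budget
        if batch and used + cost > budget:
            yield "\n".join(batch)
            batch, used = [], 0
        batch.append(turn)
        used += cost
    if batch:
        yield "\n".join(batch)  # flush whatever is left


# Small demonstration with a tiny budget so the batching is visible
demo_turns = ["a" * 40] * 5            # five turns, about 10 estimated tokens each
demo_batches = list(batch_turns(demo_turns, budget=25))
```

Each resulting batch could then be passed as the `conversation` argument of `memorize_conversation`, as in the quick start above.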

See the API reference and examples in the project's docs and on GitHub for full integration details (webhooks, example agents, and best practices).

MemU is a focused memory layer for building AI that remembers users across sessions. It is particularly strong when you need structured, evolving memories (not just embeddings) and when cost/accuracy tradeoffs matter for persistent agents. If you’re building a long-lived AI companion, roleplay system, or stateful agent, MemU is worth trying: start with the cloud offering for rapid testing, then consider self-hosting or the enterprise edition as needs grow.